Artificial Intelligence: Separating Hype From Reality

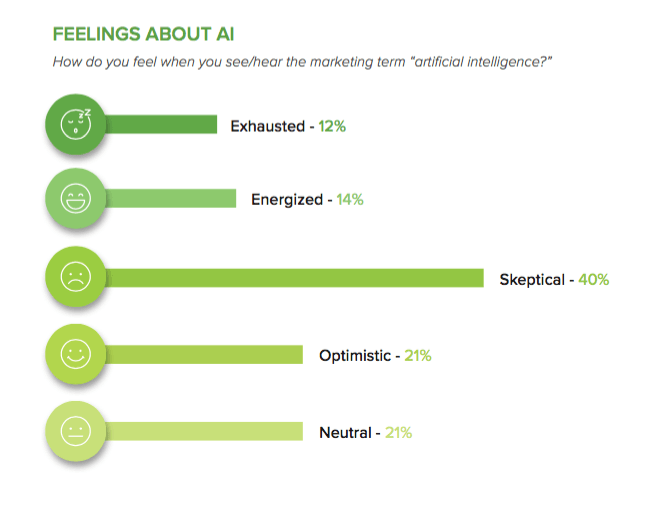

What do vendors actually mean (if anything at all) when they say their products are “AI-driven” or “contain built-in machine learning capabilities”? Certainly, technology buyers have become pretty cynical about the use of these terms to sell products. A recent Resulticks survey, reported in Adweek, found that:

- 47% of marketers consider AI to be overhyped

- 20% of marketers think vendors don’t know what they are talking about

- 40% of marketers surveyed say that the expression “artificial intelligence” makes them feel skeptical, and 12% feel exhausted

Let’s take a closer look at these technologies and what they can do.

Artificial Intelligence (AI) is the broadest and vaguest term in this domain. AI has been around since the 1950s and is really a superset of machine learning and deep learning. The essence of AI is developing computer systems that can perform tasks that are normally thought to require human intelligence.

HAL, the artificially intelligent computer from the movie 2001: A Space Odyssey, is probably the first thing that springs to mind in the popular imagination when somebody brings up AI. While at a practical level we may still be a long way from HAL, there have been significant advances in the more limited domains underpinning AI: machine learning and deep learning.

Machine Learning vs. Deep Learning

- Machine Learning: Machine learning is an application of Artificial Intelligence that allows software to become more accurate at predicting outcomes without being explicitly programmed. The idea is that a model or algorithm takes in data from the world, and that data is fed back into the model so that it improves over time. It’s called machine learning because the model “learns” as it is fed more and more data. A self-driving car is a well-known example of a system built on machine learning. Another good example is Amazon’s recommendation engine, which gets better the more data it has to process.

- Deep Learning: Deep learning is one of the hottest topics in technology today and is a specific subcategory of machine learning. It is inspired by the structure and function of the brain: deep learning systems use artificial neural networks made of many layers of simple units stacked on top of each other, with connections that loosely resemble those between neurons in the brain. The premise is that computers can be made to mimic aspects of our own decision-making.
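The machine learning idea of feeding data back into a model so that it improves over time can be sketched as online learning. The example below uses a perceptron, one of the oldest learning algorithms, chosen here only as an illustration; the `Perceptron` class, the `train` helper, and the toy rule behind the data (label +1 when the first value exceeds the second) are all hypothetical and not how any particular product works.

```python
class Perceptron:
    """Minimal online linear classifier that predicts +1 or -1."""

    def __init__(self, n_features, learning_rate=1.0):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        score = sum(w * xi for w, xi in zip(self.weights, x)) + self.bias
        return 1 if score >= 0 else -1

    def update(self, x, label):
        """Feed one labeled example back in; adjust the weights only on a mistake."""
        if self.predict(x) == label:
            return False
        self.weights = [w + self.learning_rate * label * xi
                        for w, xi in zip(self.weights, x)]
        self.bias += self.learning_rate * label
        return True


def train(model, stream, passes):
    """Run over the data several times, recording the number of mistakes per pass."""
    history = []
    for _ in range(passes):
        history.append(sum(model.update(x, y) for x, y in stream))
    return history


# Hypothetical toy data: label is +1 when the first value is larger than the second.
DATA = [((2.0, 1.0), 1), ((1.0, 3.0), -1), ((3.0, 0.5), 1),
        ((0.5, 2.0), -1), ((4.0, 1.0), 1), ((1.0, 4.0), -1)]

model = Perceptron(n_features=2)
print(train(model, DATA, passes=3))  # [2, 0, 0]
```

Nobody writes the rule into the code: the model makes two mistakes on the first pass, corrects itself from that feedback, and makes none afterwards.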

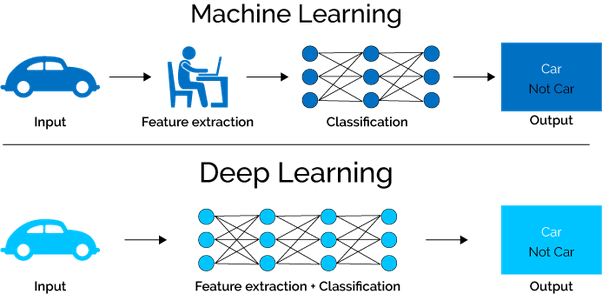

So what is the precise difference between machine learning and deep learning?

Let’s take an example of image recognition. If we are trying to teach a computer to tell the difference between a cat and a dog, we must provide the machine with many examples it can learn from. This is true of both machine learning and deep learning. But the techniques are different.

In traditional machine learning approaches to image recognition, the first step is to manually select relevant features of an image, such as edges, corners, or shapes, and use them to train the model. The model then references those features when analyzing and classifying new objects. So you start with an image, extract features from it, and then create a model that describes or predicts the object.
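The image, features, model pipeline can be sketched in a few lines of Python. This is a deliberately simplified illustration, not a real cat-versus-dog system: the “images” are tiny binary grids, the hand-picked features are counts of horizontal and vertical edges, and the model is a nearest-centroid classifier. All function names are hypothetical.

```python
import math


def extract_features(image):
    """Hand-engineered features: (horizontal edge count, vertical edge count).

    An edge is any pair of adjacent pixels whose values differ.
    """
    rows, cols = len(image), len(image[0])
    # Left/right neighbours that differ mark a vertical boundary.
    vertical = sum(image[r][c] != image[r][c + 1]
                   for r in range(rows) for c in range(cols - 1))
    # Up/down neighbours that differ mark a horizontal boundary.
    horizontal = sum(image[r][c] != image[r + 1][c]
                     for r in range(rows - 1) for c in range(cols))
    return (horizontal, vertical)


def fit_centroids(examples):
    """Train: average the feature vectors of each label."""
    sums, counts = {}, {}
    for image, label in examples:
        features = extract_features(image)
        total = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in total)
            for label, total in sums.items()}


def classify(centroids, image):
    """Predict: pick the label whose average features are closest."""
    features = extract_features(image)
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


def horizontal_bar(row, size=4):
    return [[1 if r == row else 0 for _ in range(size)] for r in range(size)]


def vertical_bar(col, size=4):
    return [[1 if c == col else 0 for c in range(size)] for _ in range(size)]


TRAINING = [(horizontal_bar(1), "horizontal"), (horizontal_bar(2), "horizontal"),
            (vertical_bar(1), "vertical"), (vertical_bar(2), "vertical")]

centroids = fit_centroids(TRAINING)
print(classify(centroids, horizontal_bar(3)))  # horizontal
print(classify(centroids, vertical_bar(0)))    # vertical
```

The model only ever sees the two numbers returned by `extract_features`. If a person picked the wrong features, no amount of extra training data would fix it.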

Deep learning works differently. In this case, you skip the manual step of extracting features from images and instead feed images directly into the deep learning algorithm, which then predicts the object. Because deep learning works directly on raw images, it is often more complex, requiring thousands of labeled images (often far more) to get reliable results. You will also need a high-performance Graphics Processing Unit (GPU) to process the large data volumes at a reasonable speed.

Deep learning has become very popular because, given enough data, it is often more accurate than traditional machine learning, particularly for tasks like image and speech recognition. Unlike traditional machine learning, you don’t have to work out which features best represent the object; these are learned for you. But the large amount of data required means the model can take a long time to train.
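For contrast, here is a minimal sketch of a neural network that is given raw pixel values and no hand-picked features. It is far too small to count as “deep”: one hidden layer, trained with backpropagation and plain gradient descent in pure Python. The `TinyNet` class, the 2x2 diagonal-versus-bar task, and the learning rate and step count are illustrative assumptions. The task cannot be solved by a single weighted sum of the pixels, so the hidden layer has to learn intermediate features of its own.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyNet:
    """One hidden layer of sigmoid units; inputs are raw pixels."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        out = sigmoid(sum(w * h for w, h in zip(self.w2, hidden)) + self.b2)
        return hidden, out

    def loss(self, data):
        """Mean squared error over the data set."""
        return sum((self.forward(x)[1] - y) ** 2 for x, y in data) / len(data)

    def train_step(self, data, lr):
        """One full-batch gradient descent step, with gradients from backpropagation."""
        n = len(data)
        g_w1 = [[0.0] * len(row) for row in self.w1]
        g_b1 = [0.0] * len(self.b1)
        g_w2 = [0.0] * len(self.w2)
        g_b2 = 0.0
        for x, y in data:
            hidden, out = self.forward(x)
            # Gradient of the loss with respect to the output unit's input.
            d_out = 2.0 * (out - y) * out * (1.0 - out) / n
            g_b2 += d_out
            for j, h in enumerate(hidden):
                g_w2[j] += d_out * h
                # Propagate the error back to hidden unit j.
                d_h = d_out * self.w2[j] * h * (1.0 - h)
                g_b1[j] += d_h
                for i, xi in enumerate(x):
                    g_w1[j][i] += d_h * xi
        self.b2 -= lr * g_b2
        self.w2 = [w - lr * g for w, g in zip(self.w2, g_w2)]
        self.b1 = [b - lr * g for b, g in zip(self.b1, g_b1)]
        self.w1 = [[w - lr * g for w, g in zip(row, grow)]
                   for row, grow in zip(self.w1, g_w1)]


# Hypothetical 2x2 "images" flattened to 4 raw pixels.
# Label 1 = diagonal pattern, label 0 = horizontal or vertical bar.
DATA = [
    ([1, 0, 0, 1], 1), ([0, 1, 1, 0], 1),
    ([1, 1, 0, 0], 0), ([0, 0, 1, 1], 0),
    ([1, 0, 1, 0], 0), ([0, 1, 0, 1], 0),
]

net = TinyNet(n_inputs=4, n_hidden=4, seed=0)
before = net.loss(DATA)
for _ in range(8000):
    net.train_step(DATA, lr=0.5)
after = net.loss(DATA)
print(f"mean squared error before: {before:.3f}, after: {after:.3f}")
```

The loss should fall as the network trains. On a toy problem like this, whether it reaches a perfect solution can depend on the random seed and the number of steps. Real deep learning uses many more layers, far more data, and GPU-backed libraries rather than hand-written loops.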

Both of these technologies are potentially transformative and have already resulted in several practical applications.

Machine learning is used every day in the following areas:

- Malware detection: Most malware shares a strong family resemblance, and a machine learning model can learn the variations within a family and predict which new files are likely to be malware.

- Stock trading: Automated, high-speed stock trading has become completely mainstream in the financial markets. Humans cannot process data with the speed and accuracy of a machine capable of making better and better predictions the more data it is exposed to.

- Healthcare: There are many machine learning applications in the healthcare domain. For example, in some studies machine learning systems have matched or outperformed human experts at reading mammography scans, with earlier detection and fewer false positives. Understanding risk factors in large populations is also a major application.

- Marketing personalization: Marketing techniques and tools like retargeting, email personalization, and recommendation engines can be fueled by machine learning technology which is able to understand customer preferences at a very granular level (and constantly improve over time) to increase the likelihood of a sale.

Deep learning also has many practical applications:

- Facial recognition: Detection of faces, and identification of people in images; recognition of facial expressions.

- Image parsing: Identification of objects in images like stop signs, traffic markings, pedestrians, etc. This is crucial for the training of self-driving cars.

- Voice recognition: Detection of human voices, transcription of speech to text, and recognition of sentiment in speech.

- Fraud identification: There are many fraud detection applications, such as identifying fraudulent information in insurance claims or detecting fraudulent credit card transactions.

Are AI Terms Just Hype?

There can be no doubt that this technology is real and potentially transformative. The problem is that the terms describing these breakthroughs are used by marketers to indicate how advanced and superior their products are with respect to the rest of the market. Suddenly, it seems, almost every product has been improved by adding in some machine learning or AI pixie dust.

Some technology products tout machine learning capabilities that they simply do not have. The risk is that throwing the terms machine learning and AI around too loosely, and advertising products as machine learning-enabled when they are nothing of the kind, can create a wave of disillusionment, leading people to assume incorrectly that all AI is hype and has no real value.

Ask Vendors the Right Questions

The key issue is that buyers want to hear the brutal truth. How can buyers tell whether vendors touting AI capabilities are legitimate? While there are many questions worth asking vendors, the following checklist is a good starting point and should help you separate hype from reality.

- Ask vendors to be very specific about capabilities, particularly around machine learning. The right question to ask is how the product learns on its own. If the product has been programmed with a set of rules that can only be updated manually, this is not a machine learning product (although it might be a perfectly serviceable product that solves a business need).

- Ask about the data that fuels the technology. For both machine learning and deep learning, ask where the data is coming from and how large the data volumes are. Both require significant quantities of well-maintained data, but this is particularly important for deep learning, where very large data volumes are required to achieve meaningful results. It could be argued that the data is actually more important than the algorithms.

- Use your own data: Just because a demo works well on the vendor’s data does not mean it will work equally well on yours. If you can, provide the vendor with a large data set of your own and have them run the product on it in real time.

- Ask for reference customers. It is very important to ask for reference customers who are actually using the product in production and achieving meaningful results, not just running a proof of concept.

- What problem is being solved? This is perhaps the most important question of all. Since AI is such a buzzword, there is a risk that vendors are not yet solving concrete problems that customers would be willing to pay for. Buyers need to understand exactly which problem the AI technology is solving, and whether the solution is worth paying for. The best way to do this is to include business leaders who understand customer problems in the product acquisition process. They can judge how the product will fundamentally help the business (e.g., by acquiring more customers, automating manual tasks, or streamlining hiring decisions), and whether it is worth the investment.
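Two checklist items, whether the product learns on its own and whether it works on your own data, can be illustrated together in a small sketch. It compares a hypothetical rule-based ticket filter, whose keyword list changes only when a person edits it, with a hypothetical naive Bayes-style filter that updates itself from labeled examples. Both are then scored on held-out tickets standing in for “your own data”. The ticket texts, keyword list, and class names are invented for illustration, and the scores are toy numbers, not a benchmark.

```python
import math
from collections import Counter

# Rule-based: this keyword list only changes when someone edits it by hand.
URGENT_KEYWORDS = {"outage", "down"}


def rule_based_is_urgent(text):
    return any(word in URGENT_KEYWORDS for word in text.lower().split())


class LearnedUrgencyFilter:
    """Naive Bayes-style word counter that updates from labeled feedback."""

    def __init__(self):
        self.word_counts = {True: Counter(), False: Counter()}
        self.doc_counts = {True: 0, False: 0}

    def learn(self, text, is_urgent):
        self.word_counts[is_urgent].update(text.lower().split())
        self.doc_counts[is_urgent] += 1

    def predict(self, text):
        vocab = set(self.word_counts[True]) | set(self.word_counts[False])
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label in (True, False):
            counts = self.word_counts[label]
            total_words = sum(counts.values())
            # Log prior plus log likelihood, with add-one smoothing
            # (the extra +1 leaves some probability for unseen words).
            score = math.log((self.doc_counts[label] + 1) / (total_docs + 2))
            for word in text.lower().split():
                score += math.log((counts[word] + 1) / (total_words + len(vocab) + 1))
            scores[label] = score
        return scores[True] > scores[False]


def accuracy(predict, labeled_data):
    """Fraction of examples where predict(text) matches the label."""
    return sum(predict(text) == label for text, label in labeled_data) / len(labeled_data)


TRAINING = [
    ("payments failing for all customers", True),
    ("checkout broken since deploy", True),
    ("site down for everyone", True),
    ("please update my billing address", False),
    ("question about invoice format", False),
    ("feature request for dark mode", False),
]
HELD_OUT = [  # stands in for "your own data", never seen during training
    ("checkout failing again", True),
    ("payments broken in europe", True),
    ("question about dark mode", False),
    ("update my address please", False),
]

model = LearnedUrgencyFilter()
for text, label in TRAINING:
    model.learn(text, label)

print(accuracy(rule_based_is_urgent, HELD_OUT))  # 0.5
print(accuracy(model.predict, HELD_OUT))         # 1.0
```

The rule-based filter misses urgent tickets that happen to use words outside its fixed list, and it stays that way until someone edits the list. The learned filter picks up those words from labeled examples. That is the distinction worth probing when a vendor claims machine learning, and it only shows up when you score the product on data it has not seen.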

Despite the many practical applications and the enormous promise of this technology, both vendors and buyers should remain focused on solving business problems.

- Buyers should not become too enamored of the latest buzzwords and take their eye off the business problem to be solved.

- Vendors should not exaggerate and should be cautious and truthful in their marketing materials, including these terms only when they are a very real component of the solution.

It’s worth remembering that the best technology solution to a business problem is often the simplest one.
